Introduction

WOZiTech promotes the use of Microservices to break down complex applications into easily maintained, deployed and managed components, with use of best breed technologies for each service (or in the case of WOZiTech projects, demonstration of new technologies by a given service) with scale and security built-in.

Microservices is an IT buzzword, but what are microservices? Let's demistify them here.

Isolation

Runtime

First, and foremost, microservices are isolated from each other at runtime. A failure in one service does not impact on another service. This applies not just across different services but equally each instance of the same service. This latter isolation is where "serverless functions" come into their own. To isolate each instance of a service, if a system-level error occurs in a service instance then that service instance should be rejected and a new instance of that service started to backfill.

Deployment

Second, microservices are isolated from each other during deployment. A failure in one service deployment does not impact upon other services, during or after the deployment. More than just each service can be deployed independently, each service can be deployed to different targets/platforms.

Packaging

Third, microservices are isolated from each other through packaging. A change in one service does not impact

upon other services. A change in one service which consequently requries deployment, does not require the

re-deployment of other services.

Isolated packaging ensures the smallest scope of deployment and consequently, the smallest scope of change. Low

level of changes encourages high levels of availability.

Consider also service maturity; where the microservice is extended over time.

Versioning of APIs are common practice; a typical node application uses npm

to manage 3rd part libraries, all at specific versions within package.json. Every microservice should be

versioned and every client should define the version of API to which they are subscribing to, even if that

client declaration is "latest" (version).

A stable service is one that stable clients will depend upon. If having to make changes to a stable service

consider first creating and deploying a new service with those chamges, thus isolating the existing stable service.

Over time as this new service establises itself and itself becomes stable, consider then whether to

merge it back to the original service (as a new verion of).

But ensure you always version your own microservices; even if this is just to track and audit the version that

is deployed, but because your microservice will be consumed by others and they should trust the version of

the microservice that they come to rely upon. And yes, logging the version that is being consumed is important

is wanting to be able to phase out old versions (you have know know which versions are in use and by which consumer).

Data

And finally, it is well accepted that microservices should own their data in its entirety. Each microservice

should have its own data store; that data store chosen specifically for the type of data it owns (or abstracts

in the case of integrating services).

A caching microservice would benefit from fast storage, such as, redis/memcached. An analytics service would

benefit from a warehouse style store.

By having its own store of data and owning that store, each microservice is able to apply its own optimisations

for accessing that, safe that it does not impact other services.

But there is a playoff on how much isolation can be supported and this vary across projects and services.

Especially when transitioning from a monolithic application or integrating with legacy applications (oh yes,

there are still many legacy billing, inventory and fulfilment applications out there), it will be necessary to

make tactical decisions to share a data store/legacy service. When doing so, be sure to have an exit strategy/lifecycle

for that service and be sure that the data/service it access is unique to it; this applies more with create/update services

than pure retrieval.

The purist principle of microservices "no share" can indeed suggest NO code reuse, such as, common logging, monitoring

authentication/authorisation functionality. There is no right or wrong answer, with benefits and drawbacks to both. A tactical

decision must be taken, but one that stems from an agreed (a documented) system design principle. If accepting the decision to

share code, ensure that code has its own lifecycle management (own repository, build and automated packaging) with

versioning. All microservices must be explicit in their use of versions.

To note, AWS has recently (circa Nov 2018) released Lambda Layers;

the ability deploy common artifacs across functions. Versioning of the layer is implicit in the definition of the layer's ARN

and the version is always incremented when deploying that layer.

Security Built-In

Not specific to microservices per se, but a mandatory characteristic as seen by WOZiTech, is that all microservices should enforce security for all their APIs; even if that security is simply to "allow all".

There are many different approches to authentication (identity) and authorisation (approval); we shall shorten this to simply A&A. The fact that microservices are stateless implies A&A must be performed on every call. For performance and isolation, it is not ideal for any microservices to have to rely on an exteral lookup for A&A resolution.

Token based A&A is the recommended approach as the token assures identity (authentication) and contains all information necessary for approval (authorisation).

Best Choice of Technologies

The IT industry is blessed with a multitude of technologies, each with their own evangelists and practioners. Some argue why there are so many different technologies and arguments have ensued whilst weighing up one technology over another with facts and passion. Some argue the birth of some technology is unnecessary. If you have one web server, why do you need another? If you have one computer language, why another? To counter the last argument, consider if you will a hardware store and the choice of screwdrivers available; surely, if you want just one screwdriver, why are their hundreds of screwdrivers to choice from?

But projects struggle to cope with a multitude different technologies; often limitations have been knowingly imposed based on the choice of technology made at the start of a project/product. With multiple technologies you have issues on skills availability, deployment and integration, especially when creating large complex applications with production-level deployment. Service Oriented Architecture (SOA) came to solve the issue of heterogenous application environments by integrating disparate applications (components) with an Enterprise Service Bus (ESB) or Broker (centralised/federated integration). You could continue to invest in legacy monolithic applications, yet build new more efficient web interfaces with data analytics and user profiling services.

SOA is complex and requires highly skills architects and designers, and requires the deployment and support of a platform on which to run SOA with the integration of that SOA platform with service endpoints (new and legacy alike). It requires a team of highly skilled engineers to run just the SOA platform itself.

With the rise of containers over the past years, fueled by kuberenetes and now capable approach of application deployment as containers, support for diverse technologies on consolidated platforms has never been easier. With a docker image you can quickly introduce services to your platform from both internal and external sources.

Microservices have segued from traditional SOA and pure docker solutions by continuing

to promote best choice of technology but simplifying implementation by adopting the ubiquitous web protocol.

The traditional ESB with its plethora of adaptors to connect to new and legacy applications/data stores and its complexity?

Gone. Every service is a simple web endpoint.

Database connectors (PostgreSQL, MySQL/MariaDB, SQLServer, DB2), messaging connectors (CICS, MQSeries, JMS),

email (pop, imap, smtp). All gone.

Proprietary scaling and failover solutions. Gone. Replaced with well understood http load balancing.

Proprietary security solutions. Gone. Replaced with TLS (SSL) and their certificates.

As Small as Can Be

Akin to the best choice of technology, as the name suggests, microservices should be as small as possible.

What is meant by small? Small here means "only essential" footprint. Include only what is needed:

- When packaging for Lambda, use webpack and babel-polyfill to create a single JS file to package and upload.

- When using docker images, start with the smallest runtimes: CoreOS or Alpine.

- When encapsulating data, consider first a in-memory or embedded database (redis/SQLlite).

- If needing a web/app server, use the lightest necessary; a simple function does not require a comprehensive node.js frameowrk like express/KOA/nest.js.

Scaling

Scaling is the ability to grow an application as demand is imposed on the application.

Traditional methods were focused on vertical scaling (buy a bigger/faster box; physical or virtual). More dyanamic is horizontal scaling; two, three, four or more boxes (phyical or virtual). Horizontal scaling requires balancing load across each instance. Balancing algorithms like, round-robin, random, least-connection (used) and least-load (weighted response time) are used to spread incoming connections. Horizontal scaling has the added bonus of failover and resiliency. But to benefit from horizontal scaling, your application needs to be aware. Moreso, you need to handle state across multiple instances; get this wrong and any benefit of horizontal scaling is lost.

With the introduction of cloud computing, moreso, public clouds, can adaptive load balancing; scale up yet also scale down, based on incoming demand. You then only pay for what you used. Large boundaries were placed on the increments/decrements owing to the time it took to start a virtualised server or stop. With Docker and containers, it got quicker to start and stop; scale up and scale down became the norm. But it was still pretty much full application instances that were being scaled horizontally.

With the prevelance of docker came kuberenetes; managed instances. It is now easy to provision 10 of those, 20 of them and 5 of these. What are "those, them and these"? They are instances of part of the application; 10 web servers, 20 app servers and 5 database servers. As demand increases, those 10 web servers and 20 app servers are scaled with different ratios depending on the applicaton resources consumed. But break the application into components. "those, them and these" now become 20 instances of order services, 10 instances of payment servies and 5 instances of product cache. But not just three ratios to manage, you now have tens/hundreds of different ratios (application clusters) to scaling up and scaling down with different cadence based on application demand. This is can be visualised as a 'Murmuration of Starlings'; a swarm of birds sweeping and turning in the sunset sky with individuals peeling off and joining at different times. But this being a set of containers not starlings, and the sky being a kubernetes cluster.

Microservices is taking this approach to the extreme. Each microservice can be scaled up/down. The fine grained microservices being more functions than components. Moreso than before, the microservice must be packaged & deployed in a way to benefit from this scaling approach. The microservice needs to be packaged as a Docker template/image.

Serverless microservices simply takes away any need to manage the provision of resources but it does restrict the technology that can be provisioned based on that made available by the cloud provider. Although note, AWS serverless functions (lambda) does support the notion of a docker image/template as the ultimate "get out clause".

Reverse Proxy/Gateway

Even with their isolation, microservices should not be accessed directly. As a minimum, all microservices should be accessed through a reverse proxy; nginx/Apache make for great reverse proxies with little overhead. Doing so, allows for a single name endpoint for all microservices, but moreso, allows for canary style or blue/green deployments to phase in modified/new services. The reverse proxy makes it easy to scale up instances (auto attach to the reverse proxy) but also add increased separation (for example, the same microservice running in multipe cloud providers).

As your microservice landscape grow in maturity, your reverse proxy will take on more and more functionality. You'll find it performing gateway style functionality (offloading authenication, circuit-breaking, throttling, geo-nearest resolution, ...). If your reverse proxy is doing more than simple redirection, it's time to look at API Gateways, the likes of:

Microservices Are Not ...

As significant as it is in defining what microservices are, it is necessary to describe what they are not. Unlike SOA, microservices are fine grained (SOA tends to course grained services) and do not set about to encourage reuse. Microservices have no formal contract. There are no dependencies between microservices and no "managed" orchestration between microservices. Microservices depend on no specific runtime platform specific.

What do we mean by "unmanaged orchestration"?

We are not saying that microservices are not part of a larger business process, as typified of a service environment. But their involvement in such is directed by the consumer of the microservice. There is no underlying abstraction coordinating their involvement.

What do we mean by "not platform specific"?

The very essence of microservices is they leverage the

ubiquitous web protocol. Even mainframes can support the web protocol. All modern platforms support the web protocol.

There is no open source/community (e.g. mulesoft/fuse) or expensive commercial (IBM Message WebBroker, Tibco, Oracle)

SOA platform. You can use different platforms in each of your environments. You can use platforms supported natively

by cloud providers and within the same environment spread those microservices across multiple providers (providing

resilience from that cloud provider). Because microservices are not constrained by any platform, your

3rd party suppliers/agencies are free from any particular choice you have made.

Of course, if you already have a SOA platform, it can host microservices (all SOA platforms will support web protocol).

For a SOA that is heavy on message, it is worth highlighting STOMP

over WebSockets can be used for a bidirectional messaging interface over http as a method of integrating SOA with microservices.

By not being dependent on any specific platform, not are are you free to choose, you are free to change! Make a choice and run with

it. But if it"s no longer working, then change your platform of choice. And yes, you are free to change your 3rd parties too.

What do we mean by "not encouraging reuse"?

With a SOA implementation, much time is invested upfront to decompose business domains into reusable set of services. Those services (components) are like mini-applications; where it makes sense to consolidate a particular business function,

it is grouped within a service (component).

By name alone (micro), microservices should be small, function (not component) based services.

It is not important if they are used in more than one place; what is important is that the function they perform has been isolated.

As noted above, to protect service availability, if making a substantial change to an existing microservice you should

be encouraged to create a new microservice.

But there is nothing wrong if indeed the microservice is (re)used in many different scenarios.

What do we mean by "no formal contract"?

To encourage interoperability between services in a SOA implementation, it is necessary to focus on the contract

of the service. That contract being a description of what features that services provides. The contract

is then registered allowing that service to become discoverable.

Such a constract would be based on standardised contracts; remember the WS* standards (WS-Security, WS-Transer, WS-Addressing, ...).

These contracts are verbose and complex covering a great number of use scenarios. Implementing a service conforming to such

standards requires a lot of effort.

That is not to say that microservices do not have a contract. They have a service definition (typically

RESTful style well-documented using swagger). But rather than based on some complex standard, the service

definition (contract) is specific to the task (function) at hand. With evolutionary development of the

microservice, this informal contract will change. That change should be via contract (interface specification

versioning).

The WOZiTech preferred approach is with Media Type versioning.

But be aware, when changing the Content-Type header, the client needs to be version aware and parse the

content type appropriately. For example, in the linked example, "Content-Type: application/vnd.myname.v2+json"

needs to be parsed (split on '+') to resolve "json". Web Browers (circa Oct2018) are

not aware, and consequently, if using your web browser to test results, they will not formally recognise JSON content.

Because of this absence of formalised contract and abstraction orchestration, it is necessary that the

consumer (client) is specific about version of microservice it uses but also then when testing, the

"in use" contract is tested in isolation from the service logic (best tested with unit tests and mocking).

What do we mean by "fine grained"?

Microservices are constructed at function level whereas SOA is component level.

A large application could have been composed of 10 or 20 SOA services, compared to 100 or 200 microservices.

A component will have many functions, whereas a microservice has a single (maybe two/three) function.

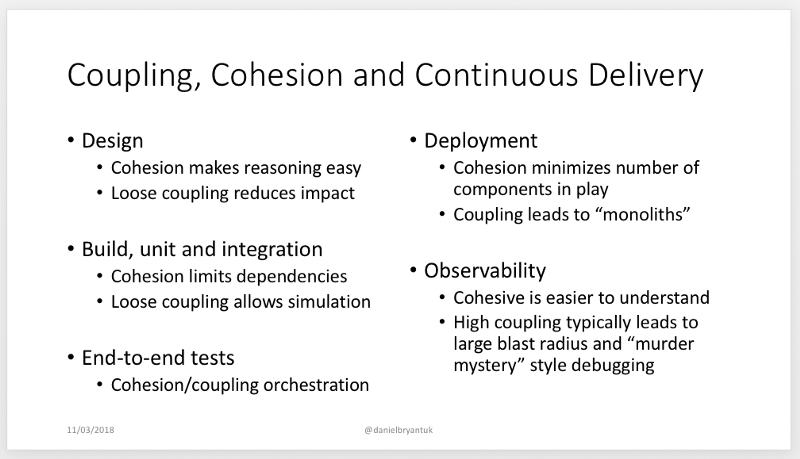

A microservice exhibits "high cohesion" (everything within the microservice is specifically related).

A great article around microservices testing from

Daniel Bryant describes the impact and consequence of coupling and cohesion, nicely summarised by the

image below.

Challenges

Microservices are not the silver bullet; they are simply an architectural approach/style and as with any such approach they have limitations and deficiencies.

CI/CD

Function-level deployment implies that in any sizable project you will have many microservices and you can expect to change each many times over its lifetime. You will also want to get the changes into live operations as quick as you can. For that, you need a good Continuous Integration, Continuous Deployment and Continuous Delivery solution. As soon as code is committed, automated unit and integration tests are performed, automated builds and deployments into acceptance and regression tests environments and automated (ungated yet managed - canary style, green/blue) delivery into live service all with the ability to regress/recall at anytime if any unwanted side effects are observed.

Agile Delivery

Through less focus on contract, reuse and with a focus on function not component, less effort is spent designing microservices

with good effort spent on Implementing microservices on the premise that 'anything can change'.

This makes microservices more suited to an agile development lifecycle.

Yes, you could spend months decomposing your problem domain, designing and specifying all such functions, then coding, building and deploying

such functions and then finally constructing business logic from the deployed functions. Just like SOA.

Moreso, you should simply develop your microservices (functions) whilst developing business logic; there is no formal contract for the

microservice.

Knowing only what you know at time, and doing only what you know at the time; this is agile delivery.

Monitoring

With tens/hundreds of microservices deployed, with runtime isolation (see above) across multiple business proceses

you have a complex function runtime with independent runtime characteristics.

Traditional tools to profiling and optimising those characteristics do not fit well when the services are distributed

within a platform or worse still across different platforms.

Without a good monitoring solution in place, you're assuming everything we be ok and when it is not, you'll be

frantically or worse still, haphazardously, hacking away to identity and then fix the problem. You are likely to make

the problem worse before it becomes better.

But what are the solutions? Still in infancy but some stop players already emerging:

Patterns

Introduction to microservices patterns

- Microsoft Patterns

- Open Source Patterns supported by Kong

Backend for Frontends

Stuff on backends for Frontends pattern